Hello readers, I’m no longer posting new content on gafferongames.com

Please check out my new blog at mas-bandwidth.com!

Hi, I’m Glenn Fiedler and this is my GDC 2019 talk called “Fixing the Internet for Games”.

It’s about what we are doing at my new startup Network Next.

When you launch a multiplayer game, some percentage of your player base will complain they are getting a bad experience.

You only need to check your forums to see this is true.

And as a player you’ve probably experienced it too.

What’s going on?

Is it your netcode, or maybe your matchmaker or hosting provider?

Can you fix it by running more servers in additional locations, or by switching to another hosting company?

Or maybe you’ve done all this already and now you have too many data centers, causing fragmentation in your player base?

It turns out that you can do all these things perfectly, yet some percentage of your player base will still complain.

The real problem is that you don’t control the route from your player to your game server, and sometimes this route is bad.

This happens because the internet is not optimized for what we want: the lowest latency, jitter and packet loss.

No amount of good netcode that you write can compensate for this.

The problem is the internet itself.

The internet doesn’t care about your game.

The internet thinks game traffic is the same as checking emails, visiting a website or watching Netflix.

But game traffic is real-time and latency sensitive. It’s not the same.

It’s interactive so it can’t be cached at the edge and buffered like streamed video.

Networks that participate in the internet do hot potato routing: they just try to get your packets off their network as fast as possible so they don’t have to deal with them anymore. Nobody is coordinating centrally to ensure that packets are delivered with the lowest overall latency, jitter and packet loss.

Sometimes ISPs or transit providers make mistakes and packets are sent on ridiculous routes that can go to the other side of the country and back on their way to a game server just 5 miles away from the player… you can call up the ISP and ask them to fix this, but it can take days to resolve.

Even from day to day, performance is not consistent. You can get a good route one day, and a terrible one the next.

For all of these problems, players tend to blame you, the developer. But it’s not actually your fault.

What can you, the game developer, do about this?

One common approach is to try running as many servers in as many locations as possible, with as many different providers as you can.

This seems like a good idea at first, but there is no one data center or hosting company that is perfectly peered with every player of your game, so ultimately, it does not solve the problem.

Flaws:

- Player fragmentation

- Logistics of so many suppliers

- Really difficult to find one data center suitable for a party or team that wants to play together

Another option is to host in public clouds. Google’s private network is pretty good, right?

Game developers tend to assume that AWS, Azure and Google peering is perfect. But this is not true.

Flaws:

- Egress bandwidth is expensive

- Locked into one provider

- Transit is not as good as you think

You could also build your own internet for your game.

This is not a joke. It actually happened!

Riot built their own private internet for League of Legends. When you play League of Legends, your game traffic goes directly from your ISP onto this private network.

Case study: Riot Direct

- Fixing the internet for real-time applications (part 1)

- Fixing the internet for real-time applications (part 2)

- Fixing the internet for real-time applications (part 3)

Flaws:

- Can you really afford this?

- How many internets do we really need?! :)

Right now, if you are a game developer shipping a multiplayer game, you are competing against companies that have built their own private internet for their game.

What’s the solution? Build your own too?

Does it really make sense to build an internet for each game? This is crazy…

There has to be a way to do this without building your own infrastructure.

Network Next was created to solve this problem.

Network Next steers your game’s traffic across private networks that have already been built, so you don’t have to build your own private internet for your game.

But hold on. Aren’t all the problems at the edge?

People seem to think that all the bad stuff on the internet occurs at the edge of the network, e.g. shitty DSL connections, oversubscribed cable networks…

But this is not true.

The backbone itself is not as good as you think it is.

And I’m going to prove it.

Just two regular computers sitting in different data centers… let’s send UDP ping and pong packets between them, so we can measure the quality of the network.
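Here is a minimal sketch of what such a ping and pong test could look like. It is not the code we actually run; the function names, the 4-byte sequence number payload, and the timeout values are all assumptions made for illustration.

```python
import socket
import struct
import threading
import time

def run_pong_server(sock, stop):
    """Echo every datagram back to its sender until `stop` is set."""
    sock.settimeout(0.1)  # wake up regularly so we can notice `stop`
    while not stop.is_set():
        try:
            data, addr = sock.recvfrom(64)
        except socket.timeout:
            continue
        sock.sendto(data, addr)

def ping(address, count=10, timeout=0.5):
    """Send `count` pings to `address`.

    Returns one entry per ping: the round trip time in milliseconds,
    or None if no matching pong arrived before the timeout.
    """
    results = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        for seq in range(count):
            sent = time.perf_counter()
            sock.sendto(struct.pack("!I", seq), address)
            rtt = None
            try:
                while True:
                    data, _ = sock.recvfrom(64)
                    # Ignore late pongs that belong to earlier pings.
                    if len(data) == 4 and struct.unpack("!I", data)[0] == seq:
                        rtt = (time.perf_counter() - sent) * 1000.0
                        break
            except socket.timeout:
                pass  # treat this ping as lost
            results.append(rtt)
    return results
```

One machine would run `run_pong_server` on a bound UDP socket, and the other would call `ping` with that machine's address and port. The list that comes back holds one round trip time per ping, with `None` marking a lost packet.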

We need some way to measure this quality as a scalar value.

Define cost as the sum of round trip time (rtt) in milliseconds, jitter (3rd standard deviation), and packet loss %.

Lower cost is good. Higher cost is bad.
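As a rough sketch, the cost could be computed from a batch of ping samples like this. I am assuming here that "3rd standard deviation" means three standard deviations of the measured round trip times, that rtt is the mean of the received samples, and that a lost packet is recorded as `None`; the function name is made up for this example.

```python
import statistics

def cost(samples):
    """Combine a batch of ping results into one scalar cost.

    `samples` holds one round trip time in milliseconds per ping,
    or None where the pong never came back.
    """
    received = [s for s in samples if s is not None]
    if not received:
        return float("inf")  # nothing got through: worst possible cost

    rtt = statistics.fmean(received)
    # Assumption: jitter is three standard deviations of the RTT samples.
    jitter = 3.0 * statistics.pstdev(received)
    loss_percent = 100.0 * (len(samples) - len(received)) / len(samples)
    return rtt + jitter + loss_percent
```

With this definition, a perfectly steady 20ms link with no loss has a cost of 20, and any jitter or packet loss pushes the number up from there.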

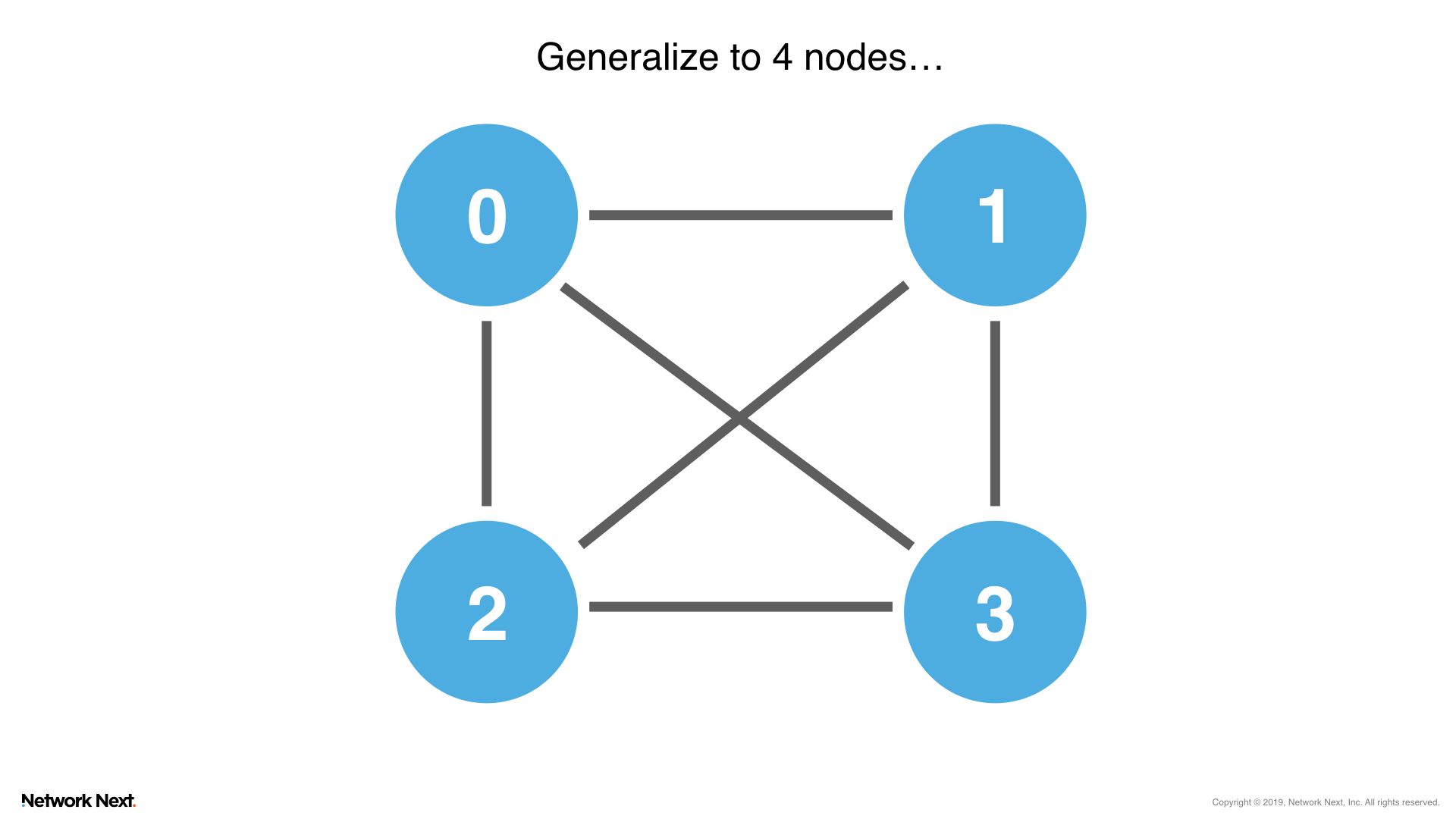

Now let’s generalize to 4 nodes.

We measure cost between all nodes via pings, O(n^2).

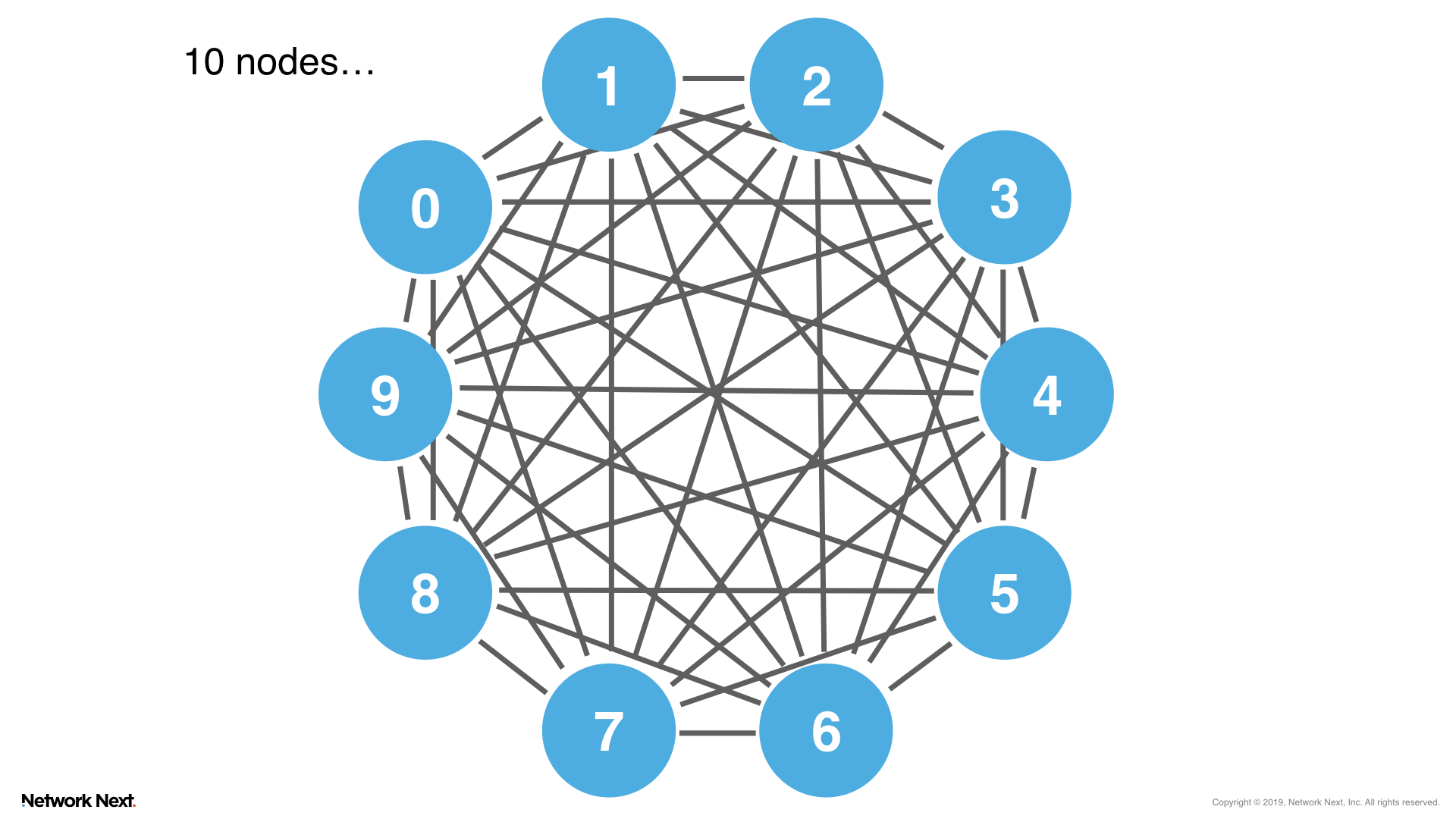

Now let’s go up to 10 nodes.

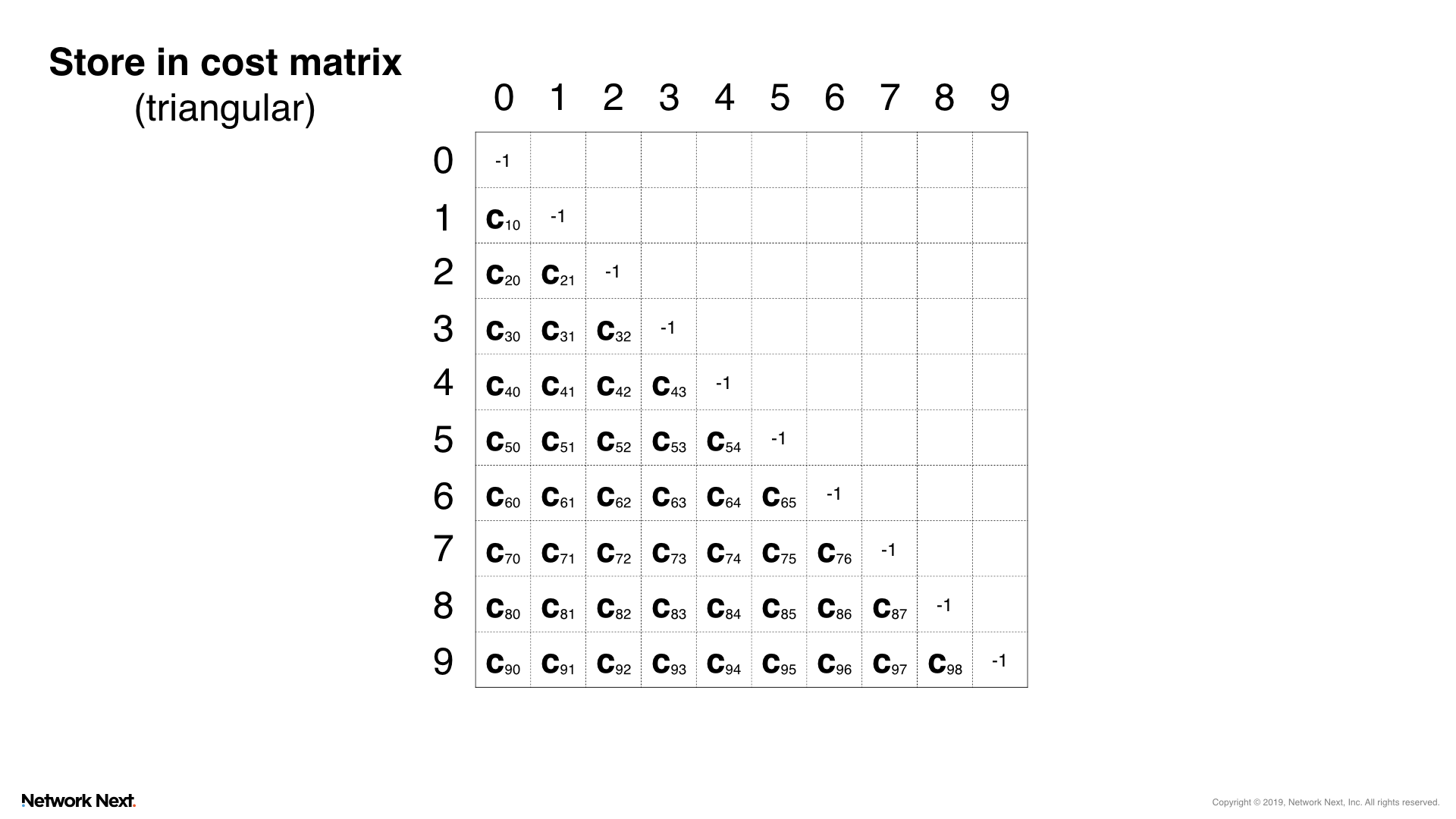

Store the cost between all nodes in a triangular matrix.

Each entry in the matrix is the cost between the node with index corresponding to the column and the node with index corresponding to the row.

The diagonal is -1, because nodes don’t ping themselves.
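One possible way to lay this out in memory is sketched below. It assumes the cost between two nodes is the same in both directions, so only the entries below the diagonal are stored and the diagonal is answered with -1 directly. The class and method names are hypothetical.

```python
class CostMatrix:
    """Symmetric cost matrix stored as a flat lower triangle."""

    def __init__(self, num_nodes):
        self.num_nodes = num_nodes
        # n * (n - 1) / 2 entries: one per unordered pair of distinct nodes.
        self.costs = [-1.0] * (num_nodes * (num_nodes - 1) // 2)

    def _index(self, i, j):
        # Order the pair so that i > j, then index row i of the triangle.
        if i < j:
            i, j = j, i
        return i * (i - 1) // 2 + j

    def set(self, i, j, value):
        if i == j:
            raise ValueError("nodes don't ping themselves")
        self.costs[self._index(i, j)] = value

    def get(self, i, j):
        if i == j:
            return -1.0  # the diagonal
        return self.costs[self._index(i, j)]
```

For 10 nodes this stores 45 values instead of 100, and `get(a, b)` returns the same value as `get(b, a)`.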

Now spin up instances in all the providers you can think of and all locations they support in North America.

For example: Google, AWS, Azure, Bluemix, vultr.com, multiplay, i3d, gameservers.com, servers.com and so on.

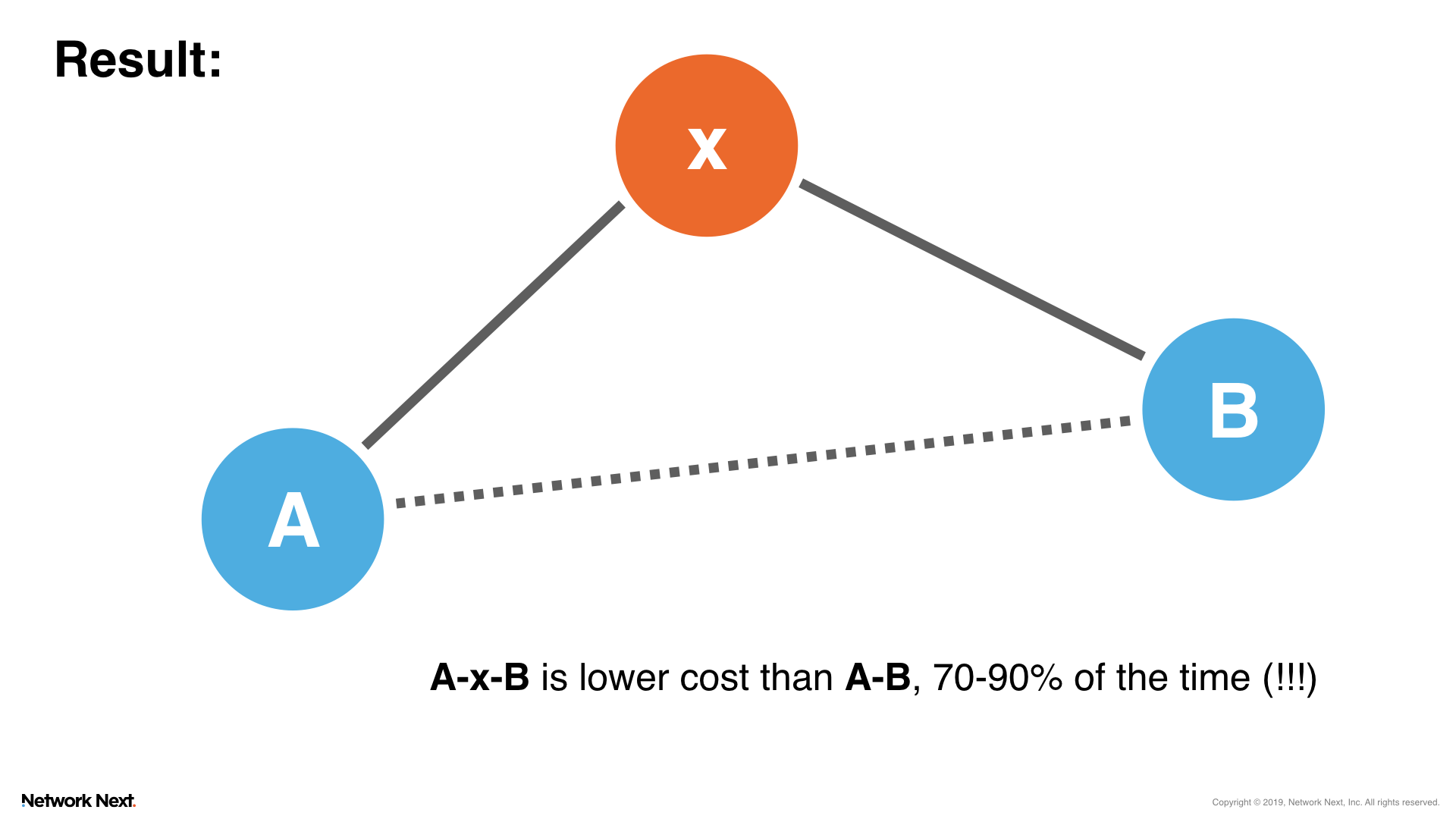

If the internet backbone were perfectly efficient, the direct route A-B would be the lowest cost 100% of the time.

Instead, for the worst provider it is only 5-10% of the time.

And the best performing provider only 30% of the time…

Of course, we are not outperforming each provider on their own internal network, those are efficient.

Instead we reveal that each node on the internet is not perfectly peered with every other node. There is some slack, and going through an intermediary node in the majority of cases can fix this.

Machines on the backbone are not talking to each other as efficiently as they can.

Talking through an intermediary is often better, in terms of our cost function.
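The comparison can be sketched as follows. This is a simplified illustration, not our production code: it only considers a single intermediary, it assumes the cost of a two-hop route is simply the sum of the costs of its two hops, and the numbers in the example matrix are made up.

```python
def best_route(costs, a, b):
    """Return (cost, via) for the cheapest route from a to b.

    `costs` is a full square matrix (list of lists) with -1 on the diagonal.
    `via` is the index of the intermediary node, or None if direct is best.
    """
    best_cost, best_via = costs[a][b], None
    for k in range(len(costs)):
        if k == a or k == b:
            continue
        # Assumption: the cost of a-k-b is the sum of the two hop costs.
        via_cost = costs[a][k] + costs[k][b]
        if via_cost < best_cost:
            best_cost, best_via = via_cost, k
    return best_cost, best_via

def direct_is_best_fraction(costs):
    """Fraction of node pairs for which the direct route has the lowest cost."""
    n = len(costs)
    pairs = [(a, b) for a in range(n) for b in range(a + 1, n)]
    direct = sum(1 for a, b in pairs if best_route(costs, a, b)[1] is None)
    return direct / len(pairs)

# Illustrative numbers only: going 0 -> 1 -> 2 costs 25, direct 0 -> 2 costs 50.
example = [
    [-1, 10, 50],
    [10, -1, 15],
    [50, 15, -1],
]
```

In the example matrix, `best_route(example, 0, 2)` picks node 1 as the intermediary, while the other two pairs are best served by the direct route.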

Why? Many reasons… but overall, the public internet is optimized for throughput at lowest cost, not lowest latency and jitter.

What other option is there, aside from the public internet?

Many private networks have been built.

These include CDNs and any corporate entity that has realized the public internet is broken and has built its own private network (backhaul) and interconnections to compensate.

(Not many people know this, but this “shadow” private internet is actually growing at a faster rate than the public internet…)

This private internet is currently closed. Your game packets do not traverse it.

How can we open it up?

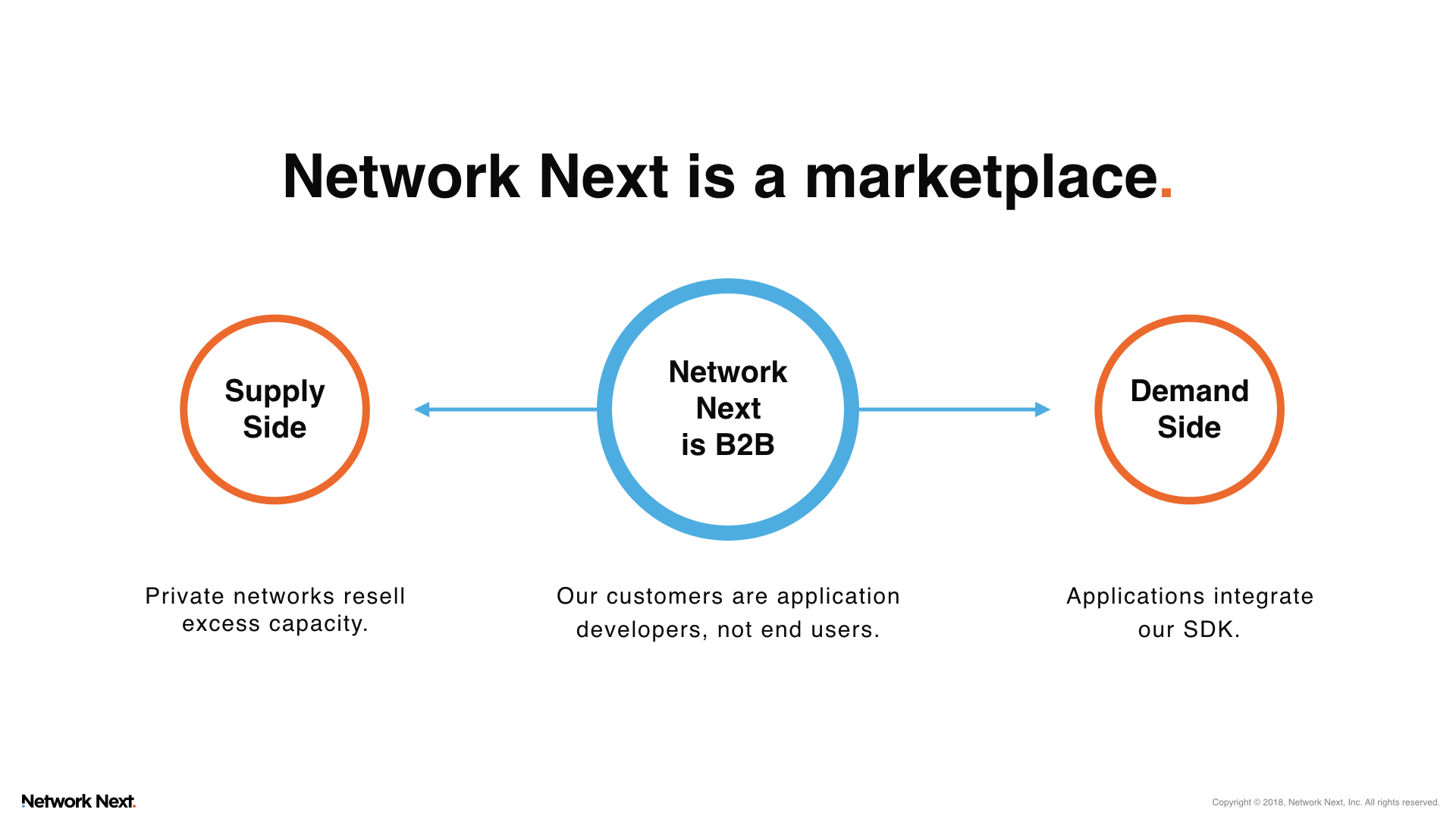

With a marketplace. Network Next is a marketplace where private networks resell excess capacity to applications that want better transit than the public internet.

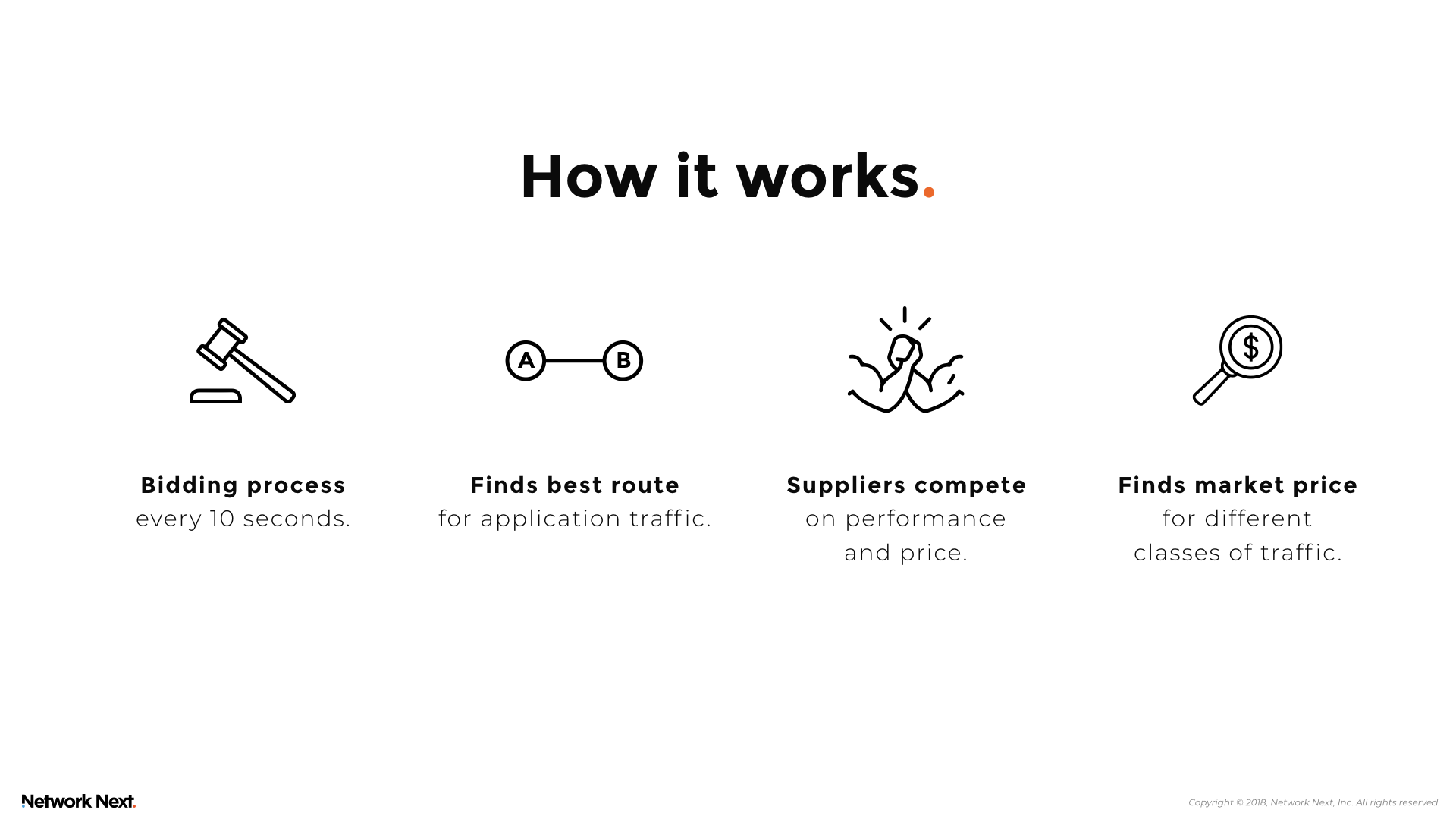

Every 10 seconds, for each player, we run a “route shader” as a bid on our marketplace, and find the best route across multiple suppliers that satisfies this request.

Suppliers cannot identify buyers, and can only compete on performance and price.

Thus, Network Next discovers the market price for premium transit, while remaining neutral.

Now let’s see how it works in practice, with real players.

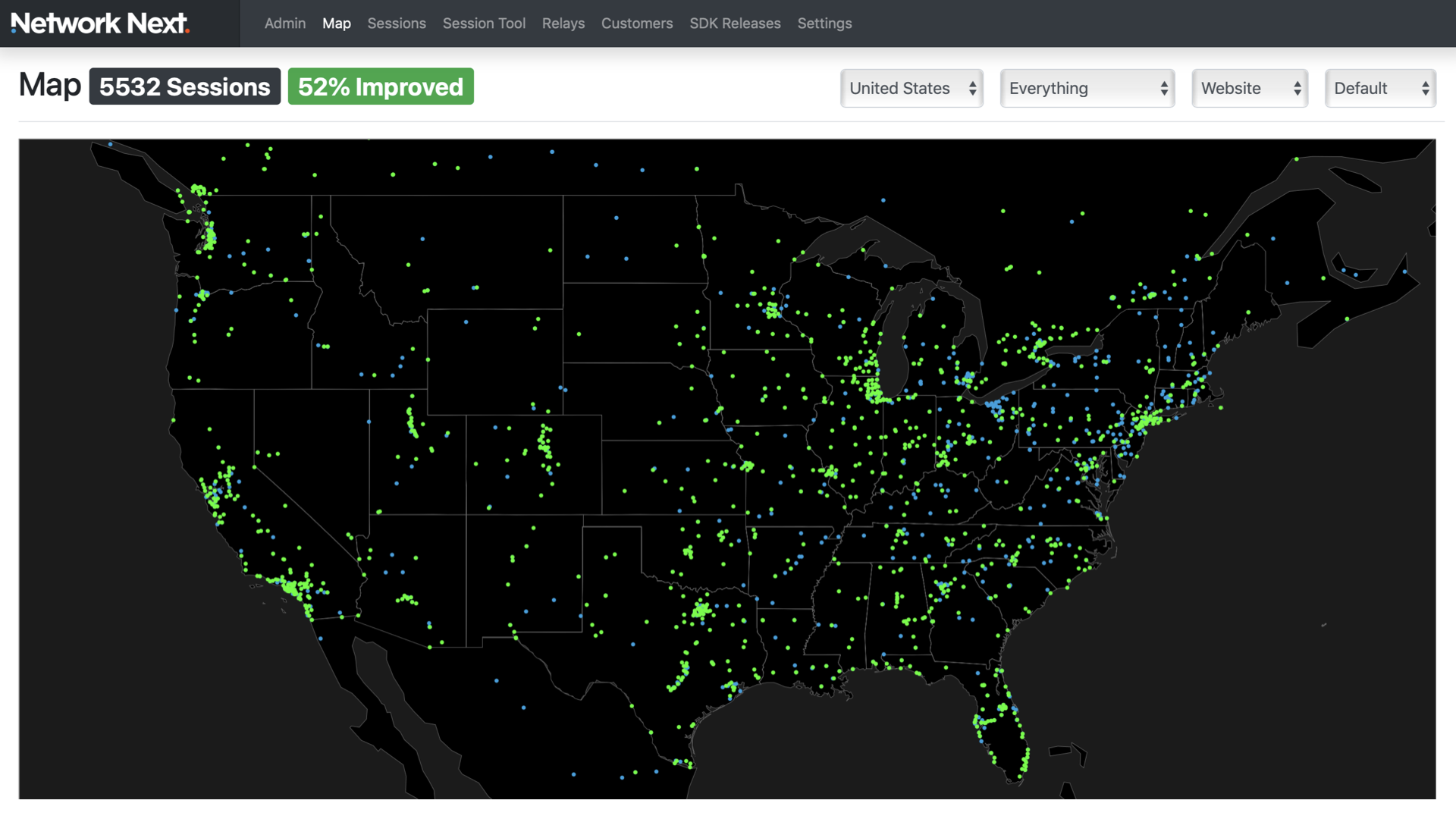

Here is a small sample of connections active at a specific time one night last week…

Each dot is a player. Green dots are taking Network Next, blue dots are taking the public internet, because Network Next does not provide any improvement for them (yet).

Around 60% of player sessions are improved, fluctuating between 50% and 60% depending on the time of day. We believe that as we ramp up more suppliers, we can get the percentage of players improved up to 90%.

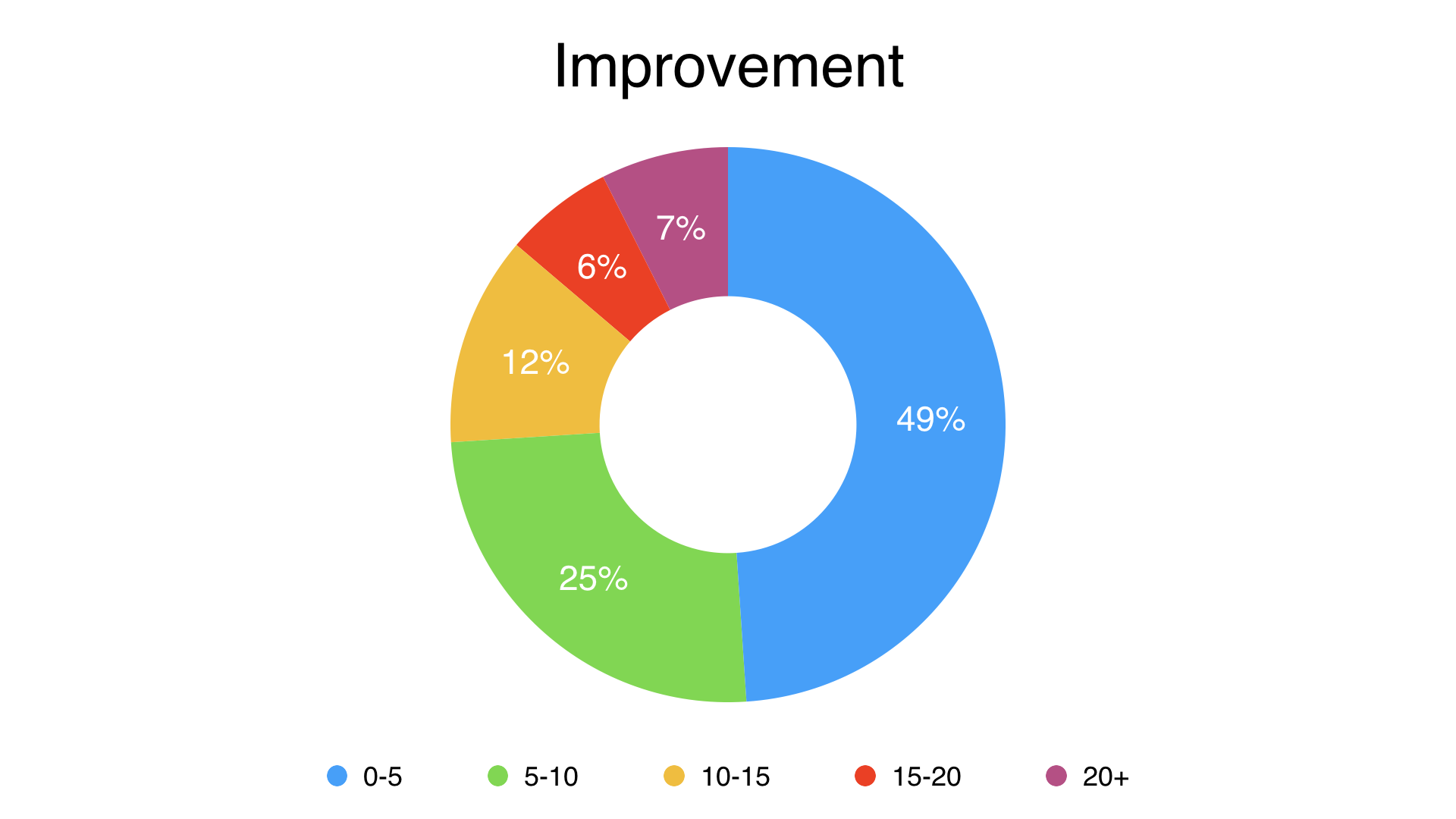

Of the 60% that are currently improved, the improvement breaks down into the following buckets:

49% of sessions had a 0-5 cost unit (cu) improvement (cost being latency + jitter + packet loss). 25% had a 5-10cu improvement. 12% had a 10-15cu improvement. 6% had a 15-20cu improvement. 7% had an improvement greater than 20cu…

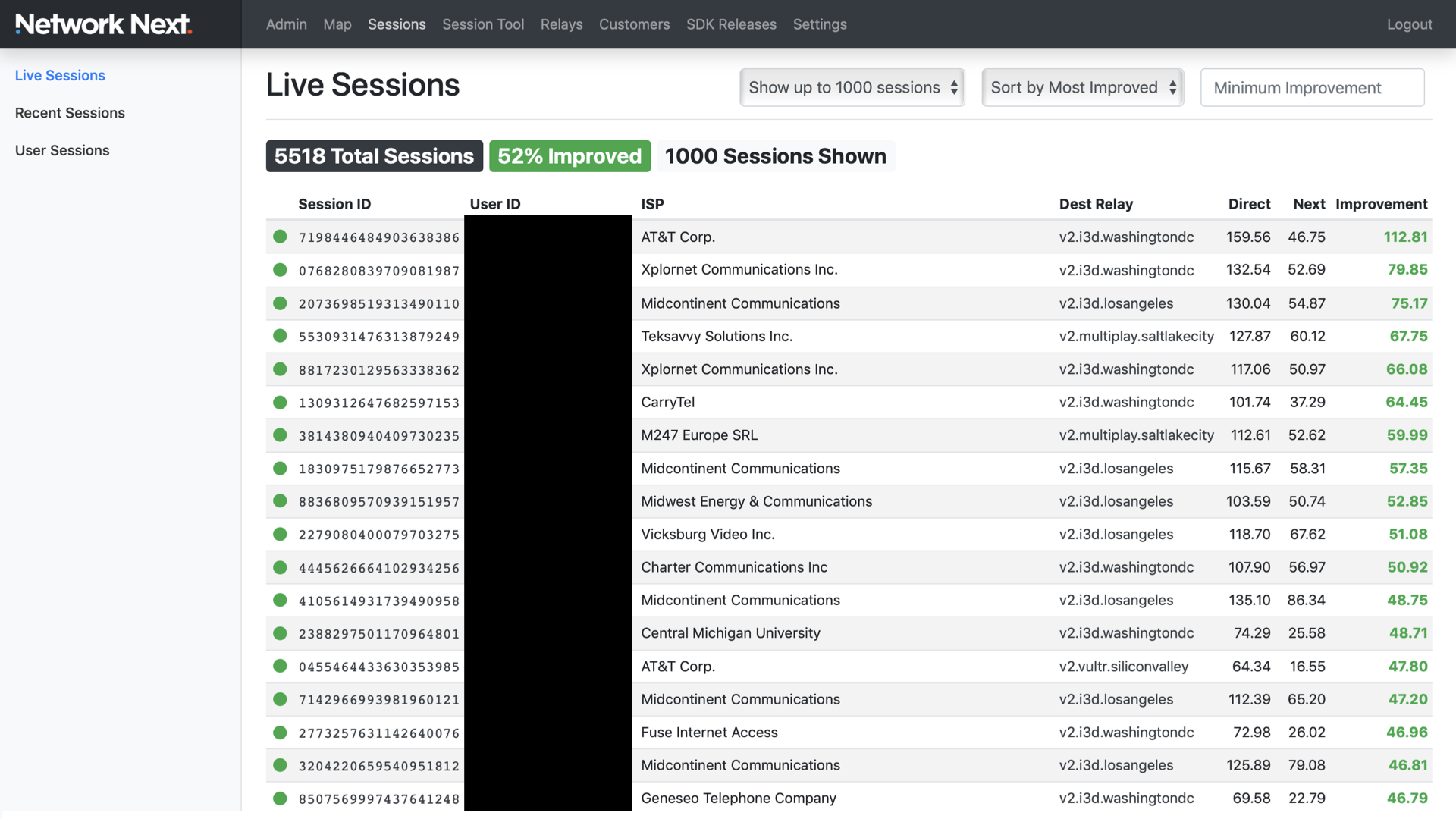

Here is a look at live sessions at a random time that night. Look at the rightmost column. Some players are getting an incredible improvement… there are always some players getting improvements like this at all times of the day…

(My apologies for the black censoring; it is necessary for GDPR compliance.)

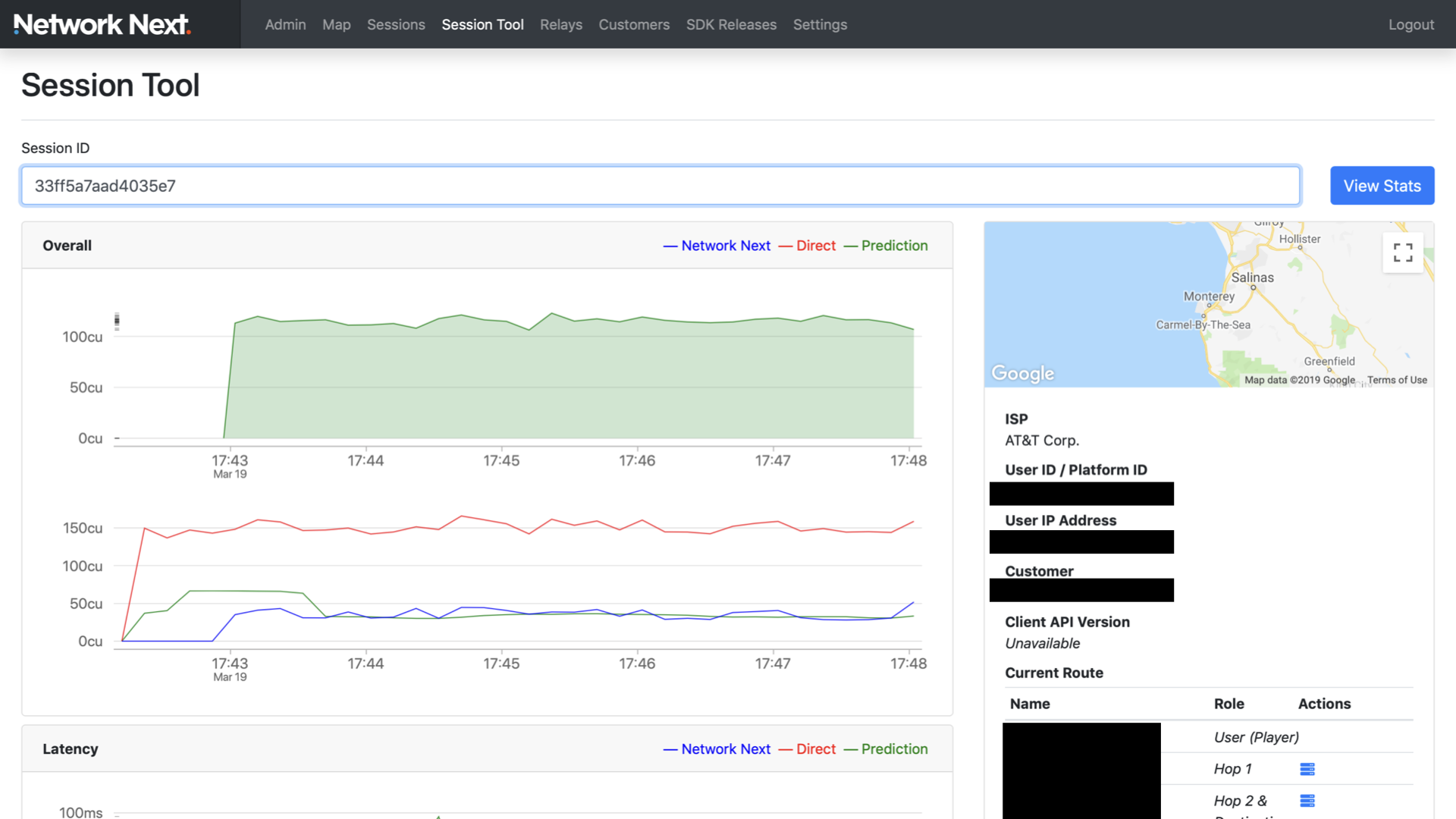

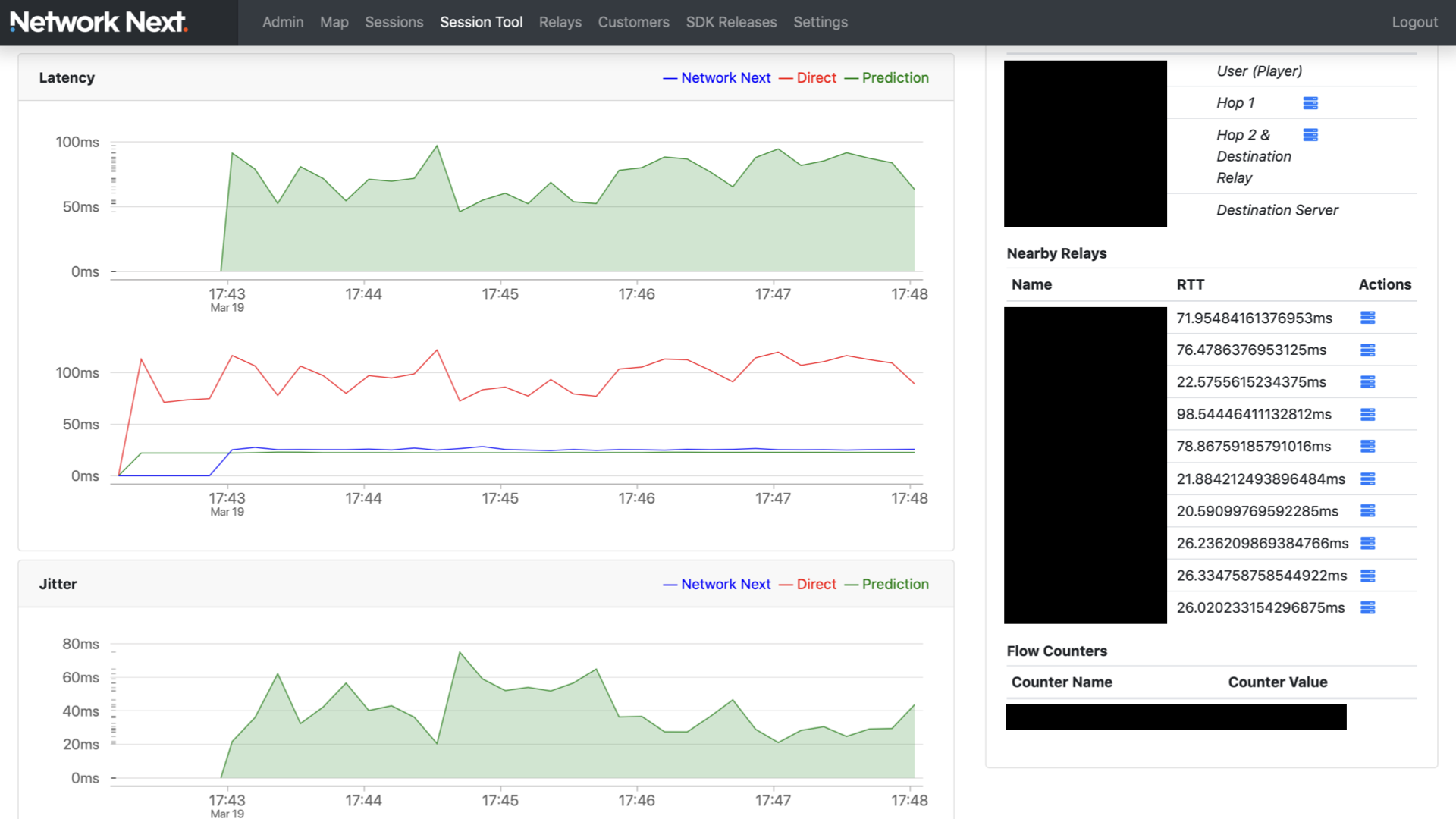

Drilling into the session getting the most improvement, we can see that they are not in the middle of nowhere, they are in Monterey, California…

Here we can see that the improvement is in both latency and jitter. Notice how flat the blue latency line is (Network Next), vs. the red line (public internet) that is going all over the place.

Network Next not only has lower latency, it is also more consistent.

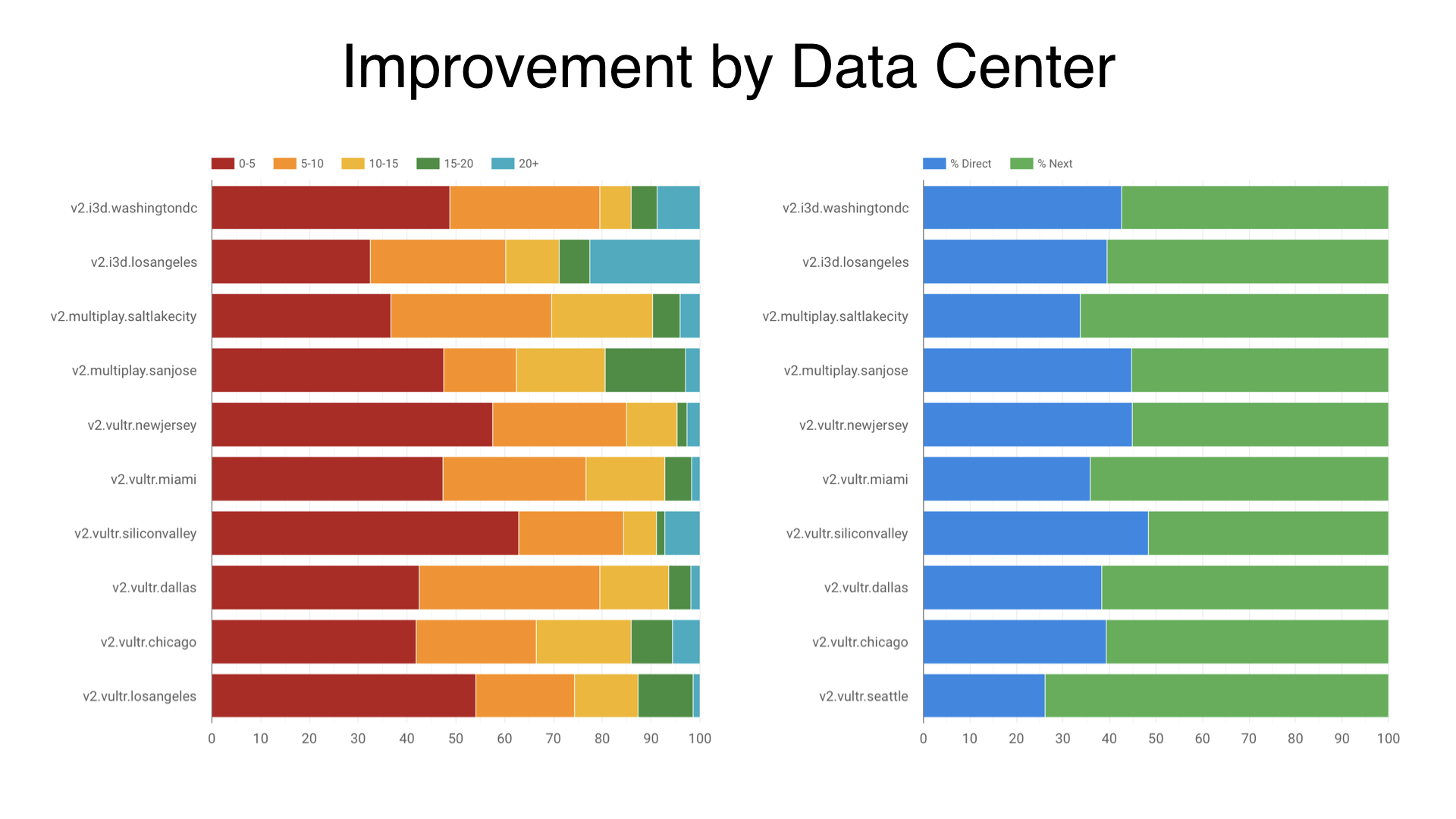

Here is a look at different data centers where our customers run game servers.

On the right is the % of players taking Network Next (getting improvement) to servers in that data center (green), and those not getting improvement and going direct over the public internet (blue).

On the left is the distribution of cost unit improvements for players that are getting improvement on Network Next to servers in that data center. The improvement depends on the peering arrangements of that data center, and on internet weather. It fluctuates somewhat from day to day.

We are able to fix this fluctuation due to internet weather and get the best result at all times.

If you’d like to learn more, please visit us at networknext.com
